Download an HTTPS Directory with Authentication in Python

Write your own Python script to automatically download data from a password-protected HTTPS directory

In this post we will focus on how to write our own code to download data from an HTTPS directory with folders and data files. We will be using some NASA websites as examples, but the process can be applied in general.

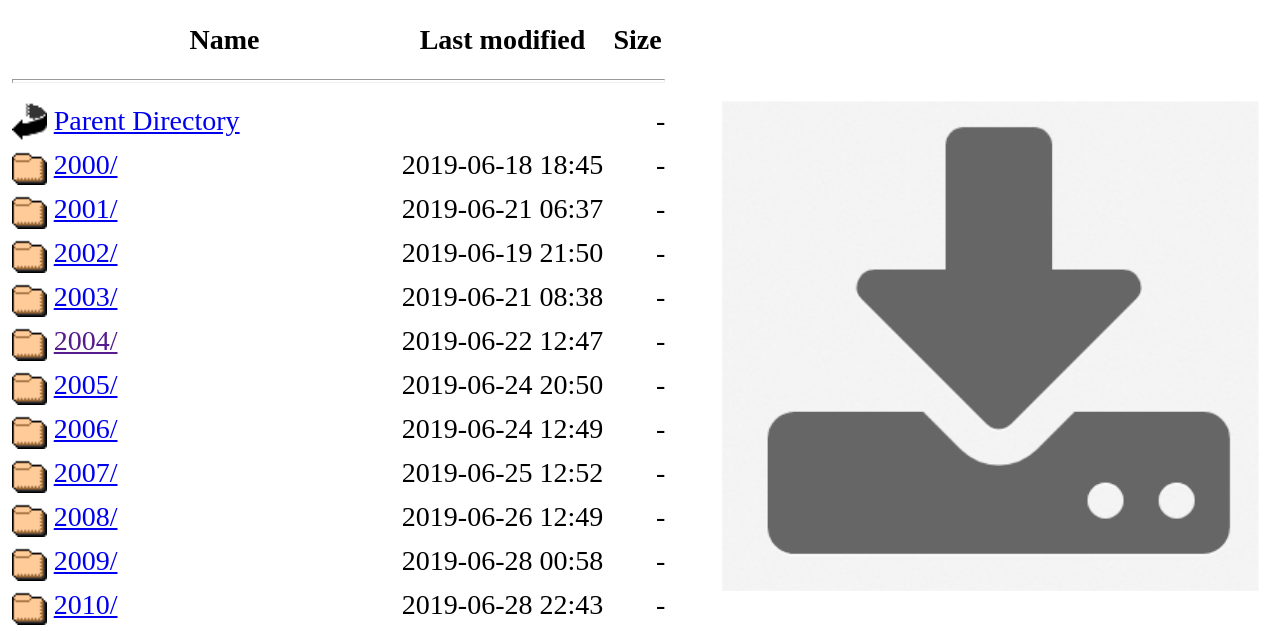

These are the URLs we want to fetch data from:

    baseurls = ['https://gpm1.gesdisc.eosdis.nasa.gov/data/GPM_L3/GPM_3IMERGM.06/',
                'https://e4ftl01.cr.usgs.gov/MOLA/MYD13C1.006/',
                'https://e4ftl01.cr.usgs.gov/MOLT/MOD13C1.006/',
                'https://n5eil01u.ecs.nsidc.org/SMAP/SPL3SMP.007/']

Step 1: Create a .netrc file to store your password

We can automate the login process with a .netrc file, which enables the use of command-line applications such as cURL or Wget. In Python, the ‘requests’ library will also read those credentials automatically.

In our example, we need to add a username and password for the host ‘urs.earthdata.nasa.gov’, which we got from EOSDIS.

To do this, enter the following in a shell:

    cd ~
    touch .netrc
    echo "machine urs.earthdata.nasa.gov login username_goes_here password password_goes_here" > .netrc
    chmod 0600 .netrc

The requests library is pretty powerful and can handle various types of authentication. See for example this reference.
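Before starting a long download, it can be worth checking that the entry is actually readable. Python's standard-library netrc module can parse the file. The sketch below is only an illustration and not part of the original script: the helper name has_credentials is hypothetical, and the demo writes a throwaway file with placeholder credentials instead of touching your real ~/.netrc.

```python
import netrc
import os
import tempfile

def has_credentials(netrc_path, host):
    """Return True if netrc_path holds a login and password for host."""
    entry = netrc.netrc(netrc_path).authenticators(host)
    if entry is None:
        return False
    login, _account, password = entry
    return bool(login) and bool(password)

# Demo on a temporary file with placeholder values (not real credentials)
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "netrc_demo")
    with open(demo_path, "w") as fh:
        fh.write("machine urs.earthdata.nasa.gov "
                 "login username_goes_here password password_goes_here\n")
    found = has_credentials(demo_path, "urs.earthdata.nasa.gov")
    missing = has_credentials(demo_path, "example.com")

print(found, missing)
```

To check the real file, the same helper could be called with the path to ~/.netrc, for example os.path.expanduser("~/.netrc").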

Step 2: List all links from a web directory

We will be using requests for data download, and parsing HTML with StringIO and etree. Make sure to include those libraries:

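    # pathlib, time and datetime are needed in Steps 3 and 4
    import pathlib
    import time
    from datetime import datetime
    from io import StringIO

    import requests
    from lxml import etree

Now, to create a list of links contained in a URL, we can use the following function: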

    def getLinks(url):
        # Relies on the global requests session created in Step 4
        print("Getting links from: " + url)
        page = session.get(url)
        html = page.content.decode("utf-8")
        tree = etree.parse(StringIO(html), parser=etree.HTMLParser())
        refs = tree.xpath("//a")
        # Collect the href of every <a> element, dropping duplicates
        return list(set([link.get('href', '') for link in refs]))

This function downloads a web page and parses the HTML content to extract the links contained in it.
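To see the parsing step in isolation, without a network connection or credentials, here is a small sketch. Note that it swaps lxml for the standard-library html.parser so that it runs without extra dependencies. The names LinkCollector and extract_links and the sample HTML are made up for illustration. It also shows how a relative href can be resolved against the page URL with urljoin.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class LinkCollector(HTMLParser):
    """Collect the href attribute of every <a> tag."""
    def __init__(self):
        super().__init__()
        self.hrefs = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.add(href)

def extract_links(html):
    """Return the unique hrefs found in an HTML string, sorted."""
    collector = LinkCollector()
    collector.feed(html)
    return sorted(collector.hrefs)

# Made-up listing that imitates a directory index page
sample_html = """
<html><body>
<a href="2015.03.31/">2015.03.31/</a>
<a href="2015.04.01/">2015.04.01/</a>
<a href="2015.04.01/">2015.04.01/</a>
<a href="/SMAP/">Parent Directory</a>
</body></html>
"""

links = extract_links(sample_html)
print(links)

# Relative hrefs are resolved against the page they came from
base = "https://n5eil01u.ecs.nsidc.org/SMAP/SPL3SMP.007/"
resolved = urljoin(base, links[1])
print(resolved)
```

The duplicate entry is dropped, just as the set() call does in getLinks, and the parent-directory link is still in the list, which is why the links need to be classified before following them.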

Step 3: Classify links into folders and data files

The pages we are scraping contain directories, usually one per date. By identifying and parsing these dates we could also restrict the download to a specific period, but in this example we are fetching the entire catalog.
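As a rough illustration of the period filter mentioned above, the sketch below keeps only folders whose names fall inside a date range. It assumes folder names in the YYYY.MM.DD format; the helper names folder_date and in_period and the folder list are hypothetical.

```python
from datetime import date, datetime

def folder_date(name):
    """Parse a folder name such as '2015.04.01/' into a date, or return None."""
    try:
        return datetime.strptime(name[0:10], "%Y.%m.%d").date()
    except ValueError:
        return None

def in_period(name, start, end):
    """True if the folder name parses to a date within [start, end]."""
    d = folder_date(name)
    return d is not None and start <= d <= end

# Made-up folder names, as they might appear in a directory listing
folders = ["2015.03.31/", "2015.04.01/", "2015.04.09/", "2016.01.01/", "docs/"]
selected = [f for f in folders
            if in_period(f, date(2015, 4, 1), date(2015, 12, 31))]
print(selected)
```

The rest of this post does not apply such a filter. The two helper functions that follow only classify links as date folders or HDF files.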

    def isDate(l):
        # A link counts as a date folder if it starts with YYYY.MM.DD or YYYY
        for fmt, substr in [('%Y.%m.%d', l[0:10]), ('%Y', l[0:4])]:
            try:
                datetime.strptime(substr, fmt)
                return True
            except ValueError:
                continue
        return False

    def isHDFFile(l):
        # Case-insensitive match on the usual HDF extensions
        ext = ['.hdf5', '.h5', '.hdf']
        return any(l.lower().endswith(e) for e in ext)

Step 4: Loop through subdirectories and download all new data files

All that is left now is going through all subdirectories and getting the data files. In our example, we will only go one level down, but the code could be easily modified to deal with more subdirectories.
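One possible way to handle deeper directory trees is a recursive generator. The sketch below is an assumption about how that could look, not the approach used in this post: the function walk, its max_depth safeguard, and the fake site are all hypothetical. The link-listing and classification functions are passed in as arguments, so it can be tried offline. In the real script, getLinks, isDate and isHDFFile could take their place.

```python
def walk(url, get_links, is_folder, is_data_file, max_depth=3):
    """Yield data file URLs found under url, descending up to max_depth levels."""
    for link in sorted(get_links(url)):
        if is_data_file(link):
            yield url + link
        elif max_depth > 0 and is_folder(link):
            yield from walk(url + link, get_links, is_folder,
                            is_data_file, max_depth - 1)

# Made-up directory tree standing in for real listings
fake_site = {
    "https://example.com/data/": ["2015/", "readme.txt", "../"],
    "https://example.com/data/2015/": ["2015.04.01/", "summary.h5"],
    "https://example.com/data/2015/2015.04.01/": ["a.h5", "b.h5", "a.h5.xml"],
}

files = list(walk(
    "https://example.com/data/",
    get_links=lambda u: fake_site.get(u, []),
    is_folder=lambda l: l[:4].isdigit() and l.endswith("/"),
    is_data_file=lambda l: l.lower().endswith(".h5"),
))
print(files)
```

Because only links that pass is_folder are followed, the parent-directory link "../" is ignored, and max_depth puts an upper bound on how far the crawl can descend.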

It is also advisable to create a persistent download session, especially if we are downloading a large number of files. That can be done with a single line of code:

    session = requests.Session()

As we are probably going to run our script quite often, and the files we are fetching rarely get updated on the servers, we would like to avoid downloading and overwriting existing files again, for efficiency. This way, it is easier not just to get the data from an entire web directory, but also to keep our local copy in sync.
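The skip-if-present idea can be isolated in two small helpers, sketched here under the assumption that local files mirror the remote layout (dataset folder, then date folder, then file). The names local_path and needs_download, and the folder and file names in the demo, are hypothetical.

```python
import pathlib
import tempfile

def local_path(root, basedir, date_folder, filename):
    """Build the local target path root/basedir/date_folder/filename."""
    return pathlib.Path(root) / basedir / date_folder / filename

def needs_download(path):
    """A file is fetched only if no local copy exists yet."""
    return not pathlib.Path(path).is_file()

# Demo in a temporary directory, with made-up folder and file names
with tempfile.TemporaryDirectory() as root:
    target = local_path(root, "SPL3SMP.007", "2015.04.01/", "sample.h5")
    before = needs_download(target)
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(b"placeholder")
    after = needs_download(target)

print(before, after)
```

This check only looks at whether the file exists, so a partially written file left behind by an interrupted run would be skipped as well. The loop that follows applies the same existence check inline.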

    for url in baseurls:
        session = requests.Session()
        basedir = pathlib.PurePath(url).name
        links = getLinks(url)
        ldates = [l for l in links if isDate(l)]
        for d in ldates:
            links_date = getLinks(url + d)
            l_hdf = [l for l in links_date if isHDFFile(l)]
            for f in l_hdf:
                # d is expected to end with '/', as directory links usually do
                folder = basedir + '/' + d
                filepath = folder + f
                if pathlib.Path(filepath).is_file():
                    print("File exists: " + filepath)
                else:
                    print("File doesn't exist: " + filepath)
                    print("Downloading... " + url + d + f)
                    response = session.get(url + d + f)
                    time.sleep(1)  # short pause between requests
                    pathlib.Path(folder).mkdir(parents=True, exist_ok=True)
                    with open(filepath, 'wb') as out:
                        out.write(response.content)

Complete script

Find the complete script getData.py in the GitHub repository of my Python4RemoteSensing project.

If you want to leave comments, don’t hesitate to start an issue, or contact me!
